There’s a lot to think about in the ongoing debacle over exam results in the UK. Here is a quick update for those who have not been following the story. Examinations for young people, including the GCSE and A level academic exams and the more vocationally oriented BTEC, were cancelled this year due to the Covid-19 pandemic. Instead, teachers were asked, first, to provide an estimated grade for each student in each subject and, second, to rank order the students in their school.

These results were sent to a central government agency, the Office of Qualifications and Examinations Regulation, known as Ofqual. But instead of awarding qualifications based on the teachers’ predicted grades, Ofqual decided, seemingly in consultation with the government or, more probably, under pressure from it, to use an algorithm to calculate grades. The algorithm was based largely on the results the school had achieved in each subject in previous years, with adjustments for small class cohorts and according to the teachers’ rankings.
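To make the basic idea concrete, here is a minimal sketch of rank-based grade allocation. It is not Ofqual’s actual model, which had further adjustments; it only assumes what is described above: the teacher’s rank order is walked from the top, and grades are handed out so that the cohort matches the school’s historical grade distribution. The function name `allocate_grades`, the `historical_share` input and the small-cohort cut-off of 5 are all hypothetical, chosen for illustration.

```python
GRADES = ["A*", "A", "B", "C", "D", "E", "U"]  # best to worst


def allocate_grades(ranked_students, predicted, historical_share, small_cohort=5):
    """Hypothetical sketch: grade a cohort by rank to match a past distribution.

    ranked_students: student names, best first (the teacher's rank order).
    predicted: dict of each student's teacher-predicted grade.
    historical_share: dict grade -> fraction of the school's past entries (sums to 1).
    small_cohort: assumed threshold below which teacher predictions are kept.
    """
    n = len(ranked_students)
    if n < small_cohort:
        # Assumed rule: too few students to apply a distribution, so use predictions.
        return {s: predicted[s] for s in ranked_students}

    result = {}
    cumulative = 0.0
    start = 0
    for grade in GRADES:
        cumulative += historical_share.get(grade, 0.0)
        # Students ranked within the cumulative share so far receive this grade.
        end = min(n, round(cumulative * n))
        for student in ranked_students[start:end]:
            result[student] = grade
        start = max(start, end)
    # Anyone left over because of rounding gets the lowest grade.
    for student in ranked_students[start:]:
        result[student] = GRADES[-1]
    return result


if __name__ == "__main__":
    students = [f"s{i}" for i in range(10)]
    predicted = {s: "A*" for s in students}  # every student predicted the top grade
    share = {"A*": 0.1, "A": 0.2, "B": 0.3, "C": 0.2, "D": 0.2}
    print(allocate_grades(students, predicted, share))
```

Note that for a cohort above the threshold the `predicted` grades are never consulted: a student predicted an A* but ranked tenth of ten still receives whatever grade the school’s past results allot to tenth place. That is exactly how individual students could be marked down to a C or D.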

The A level results were released last week. They showed massive irregularities at an individual level, with some students seemingly downgraded from a predicted A* (the highest grade) to a C or D. Analysis also showed that students from expensive private schools tended to do better than expected, whilst students from public sector schools in working class areas did proportionately worse than predicted. In other words, the algorithm was biased.

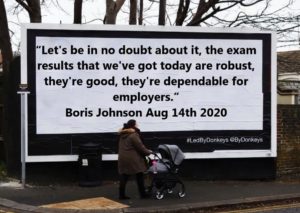

As soon as the A level results were announced there were protests from teachers, schools and students. Yet the government stuck to its position, saying there would be no changes. The Prime Minister, Boris Johnson, said “Let’s be in no doubt about it, the exam results we’ve got today are robust, they’re good, they’re dependable for employers”. However, concern quickly grew about the prospect of numerous appeals and the time it would take teachers to prepare them. Meanwhile, the Scottish government (which is autonomous in education policy) announced that it would revert to the teachers’ predicted grades. In England, while the government stood firm, demonstrations by school students broke out in most cities. By the weekend it was clear that something had to change, and on Monday the UK government, responsible for exams in England, announced that it too would award the teachers’ predicted grades.

The political fallout goes on. The government is trying to shift the blame to Ofqual, despite clear evidence that ministers knew what was happening. Meanwhile, some universities, which rely on the grades to decide whom to offer places to, are now massively oversubscribed as a result of the upgrades.

So, what does this mean for the use of AI in education? One answer may be that there needs to be careful thinking about how data is collected and used. As one newspaper columnist put it at the weekend, “Shit in, shit out”. Essentially, the data used were the aggregate exam results of each school in previous years. These have little or no relevance to how an individual student might perform this year. In fact, the algorithm was designed not to award a grade that reflected a student’s learning and work, but to prevent what is known as grade inflation: the increasing number of students achieving higher grades each year. The government sees this as a major problem.

But this in turn has sparked off a major debate, with suspicions that the government does in fact support a bias in results, aiming to empower the elite to attend university while the rest are directed towards second-class vocational education and training. It has also been pointed out that the Prime Minister’s senior advisor, Dominic Cummings, has in the past written articles appearing to suggest that upper class students are inherently more intelligent than those from the working class.

Although blunt in its impact, the algorithm merely replicated processes that have been followed for many years (and certainly predate big data). Many years ago, I worked as a project officer for the Wales Joint Education Committee (WJEC), the examination board for Wales. At that time there were quite a number of recognised examination boards, although the number has since been reduced by mergers. I was good friends with a senior manager at the board, and he told me that every year, about a week before the results were announced, the exam boards shared their results, including the number of students to be awarded each grade. The results were then adjusted to fit the figures that the boards had agreed to award that year.

And this gets to the heart of the problems with the UK assessment system. Of course, one issue is the ridiculous importance placed on formal examinations. But it also reflects the approach to assessment. Broadly, there are three approaches. Criterion-referenced assessment means that any student who meets a set criterion is awarded the corresponding grade. Ipsative assessment measures achievement against the individual’s own previous performance. But UK national exams are norm referenced, which means that a norm is set for passing and for grading. This is fundamentally unfair: if one year’s cohort is high achieving, the grade boundaries are raised so that the number of students achieving each grade still meets the desired target. The algorithm applied by Ofqual was essentially designed to ensure results complied with the norm, regardless of individual attainment. It has always been done this way; the difference this year was the blatant crudeness of the system.
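The difference between criterion and norm referencing can be sketched in a few lines. This is an illustrative toy, not how any exam board actually sets boundaries: the function names, mark boundaries and grade quotas are all hypothetical. Criterion referencing maps a mark to a grade using fixed boundaries; norm referencing sorts the cohort and shares out fixed proportions of each grade, so the number of each grade awarded does not depend on how well the cohort did.

```python
def criterion_grades(marks, boundaries):
    """Criterion referencing: a given mark earns a given grade, whatever others score.

    boundaries: list of (minimum_mark, grade), highest first (hypothetical values).
    """
    grades = []
    for mark in marks:
        for minimum, grade in boundaries:
            if mark >= minimum:
                grades.append(grade)
                break
        else:
            grades.append("U")  # below every boundary
    return grades


def norm_grades(marks, quotas):
    """Norm referencing: grades are shared out by position in the cohort.

    quotas: list of (grade, fraction of cohort), best first (hypothetical values).
    Ties are broken by original position, since Python's sort is stable.
    """
    order = sorted(range(len(marks)), key=lambda i: marks[i], reverse=True)
    grades = ["U"] * len(marks)
    cumulative, start = 0.0, 0
    for grade, share in quotas:
        cumulative += share
        end = min(len(marks), round(cumulative * len(marks)))
        for i in order[start:end]:
            grades[i] = grade
        start = max(start, end)
    return grades


if __name__ == "__main__":
    boundaries = [(80, "A"), (60, "B"), (40, "C")]
    quotas = [("A", 0.2), ("B", 0.3), ("C", 0.5)]
    strong = [95, 92, 90, 88, 86, 84, 83, 81, 80, 79]  # a high-achieving cohort
    print(criterion_grades(strong, boundaries))  # nine A grades and one B
    print(norm_grades(strong, quotas))  # still only two A grades, three B, five C
```

With the strong cohort, students scoring between 80 and 86 would earn an A against fixed criteria but are pushed down to a C once grades are rationed by quota, which is the unfairness described above.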

So, there is a silver lining, despite the stress and distress caused to thousands of students. At last there is a focus on how the examination system works, or rather does not. And there is a focus on the class-based bias that has always been present in the system. However, it would be a shame if the experience stopped people from looking at the potential of AI, not for rigging examination results, but for supporting the introduction of formative assessment for students to support their learning.

If you are interested in understanding more about how the AI-based algorithm worked, there is an excellent analysis by Tom Haines in his blog post ‘A levels: the Model is not the Student’.