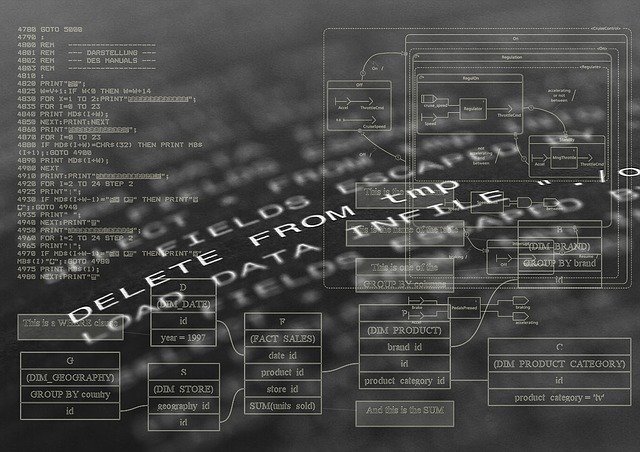

geralt (CC0), Pixabay

There seems to be a growing awareness of the use of algorithms and the problems with them – at least in the UK, where what Boris Johnson called “a rogue algorithm” caused chaos in students’ exam results. It is becoming very apparent that there needs to be far more transparency about what algorithms are being designed to do.

Writing in Social Europe, Christina Colclough says: “Algorithmic systems are a new front line for unions as well as a challenge to workers’ rights to autonomy.” She draws attention to the increasing surveillance and monitoring of workers at home or in the workplace. She says strong trade union responses are required immediately to balance out the power asymmetry between bosses and workers and to safeguard workers’ privacy and human rights. She also says that improvements to collective agreements as well as to regulatory environments are urgently needed.

Perhaps her most important argument is about the use of algorithms:

> Shop stewards must be party to the ex-ante and, importantly, the ex-post evaluations of an algorithmic system. Is it fulfilling its purpose? Is it biased? If so, how can the parties mitigate this bias? What are the negotiated trade-offs? Is the system in compliance with laws and regulations? Both the predicted and realised outcomes must be logged for future reference. This model will serve to hold management accountable for the use of algorithmic systems and the steps they will take to reduce or, better, eradicate bias and discrimination.
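
To make the logging point a little more concrete, here is a minimal sketch in Python of what recording predicted and realised outcomes might look like, and how such a log could feed an ex-post check. It is only an illustration: the `Decision` record, the `worker_group` labels and the choice of a simple error-rate gap as the bias signal are my own assumptions, not something taken from the article or from any real system.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Decision:
    """One logged decision (hypothetical record layout)."""
    worker_group: str  # assumed grouping, e.g. contract type
    predicted: bool    # what the system predicted
    realised: bool     # what actually happened


def error_rates_by_group(log):
    """Share of decisions per group where prediction and outcome disagree."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for d in log:
        totals[d.worker_group] += 1
        if d.predicted != d.realised:
            errors[d.worker_group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_gap(rates):
    """Largest difference in error rate between any two groups."""
    return max(rates.values()) - min(rates.values()) if rates else 0.0


# Made-up entries, purely to show the shape of the log.
log = [
    Decision("full_time", True, True),
    Decision("full_time", False, False),
    Decision("part_time", False, True),
    Decision("part_time", True, True),
]

rates = error_rates_by_group(log)
print(rates, max_gap(rates))
```

A gap like this would not prove discrimination on its own, but it is the kind of figure shop stewards and management could both look at when asking whether the system is biased and what to do about it.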

Christina Colclough believes the governance of algorithmic systems will require new structures, union capacity-building and management transparency. I can’t disagree with that. But what is also needed is a greater understanding of the use of AI and algorithms – for good and for bad. This means an education campaign – in trade unions but also for the wider public – to ensure that developments are for the good and not just another step in the progress of Surveillance Capitalism.