The AI in education theme continues to gather momentum, resulting in a non-stop stream of journal articles, reports, newsletters, blogs and videos. However, while the stream is not diminishing, there seems to be a subtle change of direction in the messages.

Firstly, despite many schools being wary of Generative AI, there is a growing realisation that students are going to use it anyway and that the various apps claiming to check student work for AI simply don't work.

At the same time, there is an increasing focus on AI and pedagogy (perhaps linked to the increasing sophistication of frontier models from Gen AI, but also to the realisation that gimmicks like talking to an AI pretending to be someone famous from the past are just lame!). This increased focus on pedagogy is also leading to pressure to involve students in the application of Gen AI for teaching and learning.

In recent studies, two ethical questions have emerged. The first is unequal access to AI applications and tools. Inside Higher Ed reports on recent research from the Public Policy Institute of California into disparate access to digital devices and the internet for K-12 students in the nation’s largest state public-school system. Put simply, they say, students who are already at an educational and digital disadvantage because of family income and first-generation constraints are becoming even more so every day as their peers embrace AI at high rates as a productivity tool, and they do not.

And while some tools will remain free, it appears that the most powerful and up-to-date tools will increasingly come at a cost. Jisc, in the U.K., recently reported that access to a full suite of the most popular generative AI tools and education plug-ins currently available could cost about £1,000 (about $1,275) per year. For many students already accumulating student debt and managing the rising cost of living, paying more than $100 per month for competitive AI tools is simply not viable.

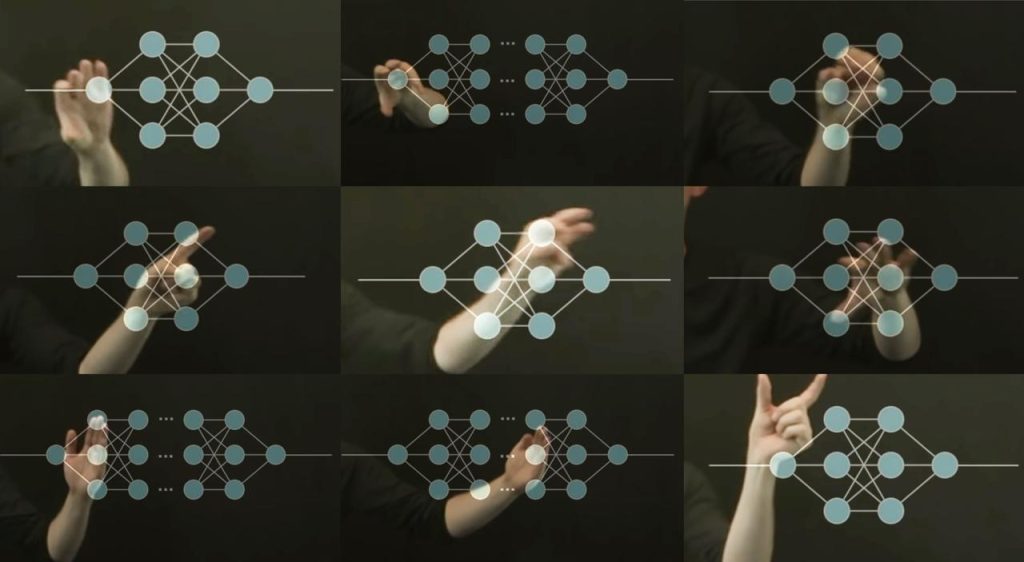

A second issue is motivation and agency for students in using AI tools. It may be that the rush to gamification, inspired by apps like Duolingo, is wearing thin. Perhaps a more subtle and sustained approach is needed to motivate learners. That may increase the focus on learner agency, which in turn is being seen as linked to Explainable AI (or XAI for short). Research around Learning Analytics (LA) has pointed to the importance of students not only understanding the purpose of LA but also being able to understand why the analytics turn out as they do. And research into Personal Learning Environments has long shown the importance of learner agency in developing meta-cognition in learning. With the development of many applications for personalised learning programmes, it becomes important that learners are able to understand the reasons for their own individual learning pathways and, if necessary, challenge them.

While earlier debates about the ethics of AI in education largely focused on technologies, the new debates are more focused on practices in teaching and learning using AI.