Teachers’ and Learners’ Agency and Generative AI

It is true that there is plenty being written about AI in education, almost to the extent that it is the only thing being written about education. But, as usual, few people are talking about Vocational Education and Training. And the discourse appears almost to default to a techno-determinist standpoint, whether by intention or not. Thus, while reams are written on how to prompt Large Language Models, little is being said about the pedagogy of AI. All technology applications favour and facilitate, or hinder and block, pedagogies, whether hidden or not (Attwell, G. and Hughes, J., 2010).

I got into thinking more about this as a result of two strands of work I am doing at present: one for the EU Digital Education Hub on the explicability of AI in education, and the second for the Council of Europe, which is developing a Reference Framework for Democratic Culture in VET. I was also interested in a worry expressed by Fengchun Miao, Chief of the Unit for Technology and AI in Education at UNESCO, that machine-centrism is prevailing over human-centrism and that machine agency is undermining human agency.

Research undertaken into Personal Learning Environments (Buchem, I., Attwell, G. and Torres, R., 2011) and into the impact of online learning during the Covid-19 pandemic has pointed to the importance of agency for learning. Arguing for a fairer, usefully transparent and more responsible online environment, Virginia Portillo et al. (2024) say young people have “a desire to be informed about what data (both personal and situational) is collected and how, and who uses it and why, and policy recommendations for meaningful algorithmic transparency and accountability. Finally, participants claimed that whilst transparency is an important first principle, they also need more control over how platforms use the information they collect from users, including more regulation to ensure transparency is both meaningful and sustained.”

The previous research into Personal Learning Environments suggests that agency is central to the development of Self-Regulated Learning (SRL), which is important for Lifelong Learning and Vocational Education and Training. Self-Regulated Learning is “the process whereby students activate and sustain cognition, behaviors, and affects, which are systematically oriented toward attainment of their goals” (Schunk & Zimmerman, 1994). And SRL drives the “cognitive, metacognitive, and motivational strategies that learners employ to manage their learning” (Panadero, 2017).

Metacognitive strategies guide learners’ use of cognitive strategies to achieve their goals, including setting goals, monitoring learning progress, seeking help, and reflecting on whether the strategies used to meet the goal were useful (Pintrich, 2004; Zimmerman, 2008).

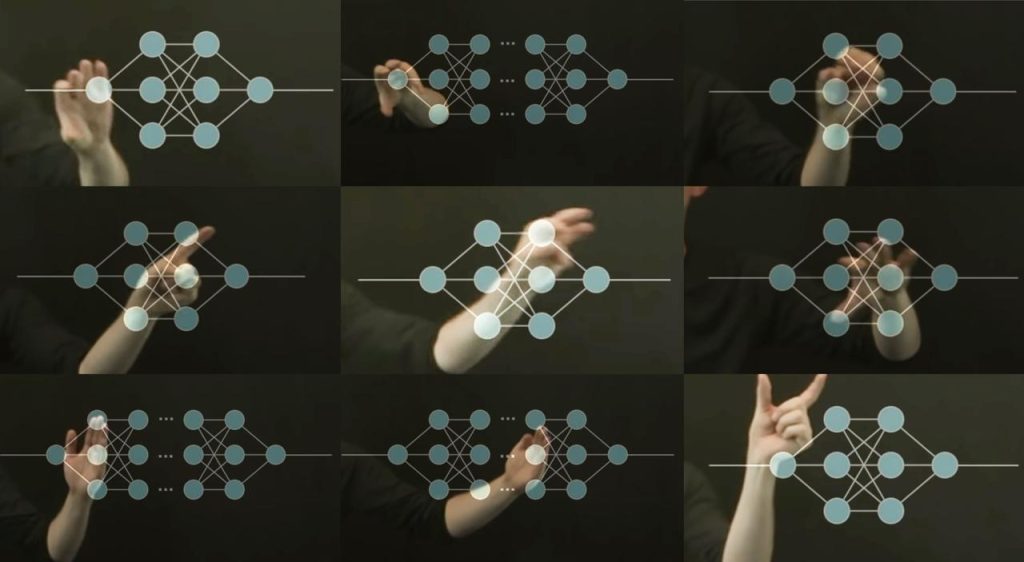

The introduction of generative AI in education raises important questions about learner agency. Agency refers here to the capacity of individuals to act independently and make their own free choices (Bandura, 2001). In the context of AI-enhanced learning, agency can be both supported and challenged in several ways. In a recent paper, ‘Agency in AI and Education Policy: European Resolution Three on Harnessing the Potential for AI in and Through Education’, Hidalgo, C. (2024) identifies three different approaches to agency related to AI for education. The first is how AI systems have been developed throughout their lifecycle to serve human agency. The second is human beings’ capacity to exert their rights by controlling the decision-making process in the interaction with AI. The third is that people should be able to understand AI’s impact on their lives and how to benefit from the best of what AI offers. Cesar Hidalgo says: “These three understandings entail different forms of responsibility for the actors involved in the design, development, and use of AI in education. Understanding the differences can guide lawmakers, research communities, and educational practitioners to identify the actors’ roles and responsibility to ensure student and teacher agency.”

Generative AI can provide personalized learning experiences tailored to individual students' needs, potentially enhancing their sense of agency by allowing them to progress at their own pace and focus on areas of personal interest. However, this personalization may also raise concerns about the AI system's influence on learning paths and decision-making processes. In a new book, "Creative Applications of Artificial Intelligence in Education", Alex U. and Margarida Romero (Editors) explore creative applications of AI across various levels, from K-12 to higher education and professional training. The book addresses key topics such as preserving teacher and student agency, digital acculturation, citizenship in the AI era, and international initiatives supporting AI integration in education. It also examines students' perspectives on AI use in education, affordances for AI-enhanced digital game-based learning, and the impact of generative AI in higher education.

To foster agency using Generative AI they propose the following:

1. Involve students in decision-making processes regarding AI implementation in their education.

2. Teach critical thinking skills to help students evaluate and question AI-generated content.

3. Encourage students to use AI as a tool for enhancing their creativity rather than replacing it.

4. Provide opportunities for students to customize their learning experiences using AI.

5. Maintain a balance between AI-assisted learning and traditional human-led instruction.

Agency is also strongly linked to motivation for learning. This will be the subject of a further blog post.

References

Alex U. and Margarida Romero (Editors) (2024) Creative Applications of Artificial Intelligence in Education, https://link.springer.com/book/10.1007/978-3-031-55272-4#keywords

Attwell, G. and Hughes, J. (2010) Pedagogic approaches to using technology for learning: literature review, https://www.researchgate.net/publication/279510494_Pedagogic_approaches_to_using_technology_for_learning_literature_review

Bandura, A. (2001) Social Cognitive Theory of Mass Communication, Media Psychology, volume 3, pp 265-299, https://api.semanticscholar.org/CorpusID:35687430

Buchem, I., Attwell, G. and Torres, R. (2011) Understanding Personal Learning Environments: Literature review and synthesis through the Activity Theory lens, https://www.researchgate.net/publication/277729312_Understanding_Personal_Learning_Environments_Literature_review_and_synthesis_through_the_Activity_Theory_lens

Hidalgo, C. (2024) ‘Agency in AI and Education Policy: European Resolution Three on Harnessing the Potential for AI in and Through Education’. In: Olney, A.M., Chounta, IA., Liu, Z., Santos, O.C., Bittencourt, I.I. (eds) Artificial Intelligence in Education. AIED 2024. Lecture Notes in Computer Science, vol 14830. Springer, Cham. https://doi.org/10.1007/978-3-031-64299-9_27

Panadero, E. (2017). A review of self-regulated learning: Six models and four directions for research. Frontiers in Psychology. https://doi.org/10.3389/fpsyg.2017.00422

Pintrich, P. R. (2004). A conceptual framework for assessing motivation and self-regulated learning in college students. Educational Psychology Review, 16(4), 385–407.

Schunk, D. H., & Zimmerman, B. J. (1994). Self-regulation of learning and performance: Issues and educational applications. Lawrence Erlbaum Associates Inc.

Zimmerman, B. J. (2008). Investigating self-regulation and motivation: Historical background, methodological developments, and future