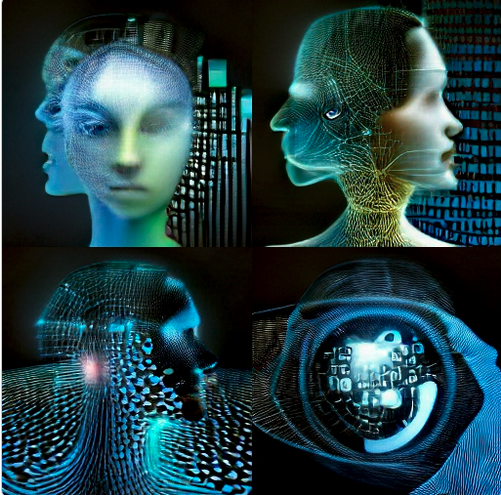

Digital Humanism

I used to be critical of the failure, or at least the slowness, of sociology to develop critical accounts of the impact of digitalisation and the rise of the internet. This seems to be changing fast, especially with the growing critiques of the use of technology for learning.

I have just received an email about a new book by Christian Fuchs entitled 'Digital Humanism: A Philosophy for 21st Century Digital Society'. Christian says:

Our contemporary global digital society is not always a good place to live. Authoritarianism, hatred, false news, post-truth culture, the COVID-19 anti-vaccination movement, COVID-19 conspiracy theories, and political polarisation are organised via the Internet. The public sphere is highly polarised. Today, many humans tend to think of other humans mainly in terms of friends and enemies. Robots and Artificial Intelligence-based automation have created new challenges for the world of work. Decades of neoliberalism have increased inequalities. The COVID-19 pandemic has shown the vulnerability of humanity to viruses and health crises.

Humanity and society are in a major crisis and digitalisation mediates this crisis. *Digital Humanism* explores how Humanism can help us to critically understand how digital technologies shape society and humanity, providing an introduction to Humanism in the digital age. Fuchs introduces the approach of Digital Humanism and outlines foundations of a Radical Digital Humanism, analysing what decolonisation of academia and the study of the digital, media and communication means; what the roles are of robots, automation, and Artificial Intelligence in digital capitalism, and how the communication of death and dying has been mediated by digital technologies, capitalist necropower, and digital capitalism. In order to save humanity and society, we need Radical Digital Humanism now.

And Eva Illouz, Director of Studies at EHESS, Paris, says:

Digital Humanism is the book we have been waiting for. … Digital Humanism refuses to transform humans into machines and to think of machines as humans. This is why this book is such an important and timely intervention.

More information and a sample reading are available at https://fuchsc.uti.at/books/digital-humanism/