eAssessment

AI and Assessment

This podcast on AI and assessment was recorded for the Erasmus+ eAssessment project. It forms part of an online course with four main sections:

- Assessment Strategies

- Analysing Evidence

- Feedback and Planning

- Community of Practice

The course, hosted on the project Moodle site, is free of charge. Participants are welcome to follow the full course or can dip in and out of the sixteen different units.

The podcast discusses the impact of Generative AI and advanced chatbots like ChatGPT and Bing on education and how they are changing the way we assess learning.

Below is a transcript of the podcast.

Scene 1

In late November 2022, OpenAI dropped ChatGPT, a chatbot that can generate well-structured blocks of text several thousand words long on almost any topic it is asked about. Within days, the chatbot was widely denounced as a free essay-writing, test-taking tool that made it laughably easy to cheat on assignments. The response from schools and universities was swift and decisive. Los Angeles Unified, the second-largest school district in the US, immediately blocked access to OpenAI’s website from its schools’ network. Others soon joined. By January, school districts across the English-speaking world had started banning the software, from Washington, New York, Alabama, and Virginia in the United States to Queensland and New South Wales in Australia. Several leading universities in the UK, including Imperial College London and the University of Cambridge, issued statements that warned students against using ChatGPT to cheat.

Scene 2

This initial panic from the education sector was understandable. ChatGPT, available to the public via a web app, can answer questions and generate unique essays that are difficult to detect as machine-written. It looked as if ChatGPT would undermine the way we test what students have learned, a cornerstone of education.

However, there is a growing recognition that advanced chatbots could be used as powerful classroom aids that make lessons more interactive, teach students media literacy, generate personalized lesson plans, save teachers time on admin, and more. Edtech companies including Duolingo and Quizlet, which makes digital flashcards and practice assessments used by half of all high school students in the US, have already integrated OpenAI’s chatbot into their apps. And OpenAI has worked with educators to put together a fact sheet about ChatGPT’s potential impact in schools. The company says it also consulted educators when it developed a free tool to spot text written by a chatbot (though its accuracy is limited). Turnitin, a leading provider of anti-plagiarism software and already a controversial one, designed a new plug-in intended to flag assessments that may have been produced with AI. Its accuracy has also been questioned, and Turnitin had to backtrack on its decision not to allow users to opt out of the new AI plug-in.

Scene 3

“We need to be asking what we need to do to prepare young people—learners—for a future world that’s not that far in the future,” says Richard Culatta, CEO of the International Society for Technology in Education (ISTE), a nonprofit that advocates for the use of technology in teaching.

Take cheating. In Crompton’s view, if ChatGPT makes it easy to cheat on an assignment, teachers should throw out the assignment rather than ban the chatbot.

Scene 4

In response to the limitations of traditional assessment methods, there is a growing movement towards authentic and personalized assessment methods in courses. These methods include using real-life examples and contextually specific situations that are meaningful to individual students. Instructors may ask students to include their personal experience or perspectives in their writing. Students can also be asked to conduct analysis that draws on specific class discussions. Alternatives to essay-based assessment also need to be further explored. These methods can include using (impromptu) video presentations for assessments or using other digital forms such as animations. Through self-assessment or reflective writing, students could discuss their writing or thinking process. Additionally, peer evaluations or interactive assessment activities could be integrated into grading by engaging students in group discussions or other activities such as research and analysis in which students are expected to co-construct knowledge and apply certain skills. Instructors may consider placing an emphasis on assessing the process of learning rather than the outcome.

Scene 5

Most of the commentary and research into the impact of Generative AI so far comes from schools and higher education. What about vocational education and training (VET)? Well, firstly I would argue that VET has been better at formative assessment than general education. And in many countries VET is based on students being able to demonstrate outcomes, usually through practical tests, which is much closer to authentic assessment. Of course, in many systems VET students are also required to produce evidence of knowledge as well as practice, and this may need some changes. But for vocational education and training, the challenge may be not so much how we are assessing as what we are assessing. Let's leave aside the discussion about whether AI is going to threaten jobs and affect employment; to be honest, no one is really sure to what extent this will happen or how many new jobs may be generated. But it is pretty clear that AI is going to impact on the content and tasks of many jobs. Vocational education and training has a key purpose in preparing students for the world of work. If that world of work includes people using AI in their occupations, then this will form a key part of the curriculum. And authentic assessment means assessing how students work with AI in the work tasks of the future.

Scene 6

Let's sum up. We need to change how we assess learning. Did ChatGPT kill assessments? They were probably already dead, and they’ve been in zombie mode for a long time.

Public values are key to efficient education and research

For those of us who have been working on AI in Education, it is a bit of a strange time. On the one hand, it is no longer difficult to interest policy makers, managers, or teachers and trainers in AI. But on the other hand, at the moment AI seems to be conflated with the hype around ChatGPT. As one senior policy person said to me yesterday: "I hadn't even heard of Generative AI models until two weeks ago."

And of course there is a lot more happening, or about to happen, not just on the AI side but in technology development and innovation more generally, much of which is likely to impact on education. So much, in fact, that it is hard to keep up. But I think it is important to keep up and not just leave the developing technology to the tech researchers. And that is why I am ultra impressed with the new publication from the Netherlands SURF network, 'Tech Trends 2023'.

In the introduction they say:

This trend report aims to help us understand the technological developments that are going on around us, to make sense of our observations, and to inspire. We have chosen the technology perspective to provide an overview of signals and trends, and to show some examples of how the technology is evolving.

SURF scanned multiple trend reports and market intelligence services to identify the big technology themes. They continue:

We identified some major themes: Extended Realities, Quantum, Artificial intelligence, Edge, Network, and advanced computing. We believe these themes cover the major technological developments that are relevant to research and education in the coming years.

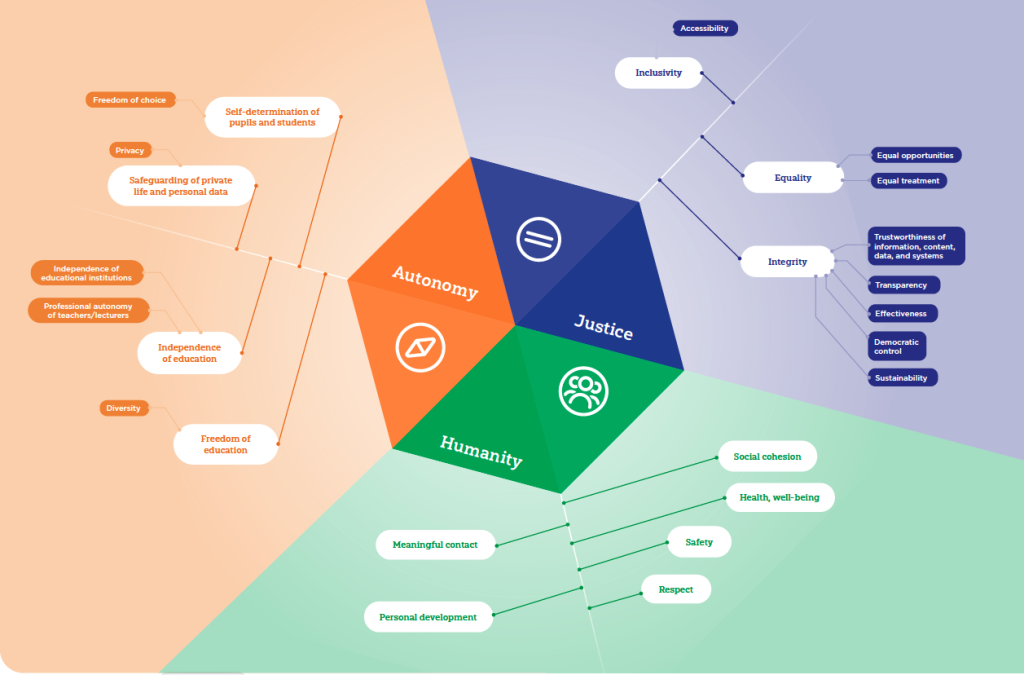

But what I particularly like is that, for each trend, the report shows the link to public values as well as the readiness level. The values are taken from the diagram above. As SURF say, "public values are key to efficient education and research."

The canary in the coalmine

AI for marking and feedback

The UK National Centre for AI, hosted by Jisc, has announced the third in a series of pilot activities for AI in education. The pilot project, being undertaken in partnership with Graide, an EdTech company that has built an AI-based feedback and assessment tool, is designed to help understand how universities could benefit from using AI to support the marking and feedback process.

AI-based marking and feedback tools promise the joint benefits of reducing educators’ workloads, whilst improving the quality, quantity, timeliness and/or consistency of feedback received by students.

As Jisc puts it: "After a positive initial assessment of Graide, we are launching this pilot to find out how Jisc’s members could benefit from this solution."

Universities in the UK have been invited to take part in the pilot. Following an initial webinar and interviews, a small number of participants will use Graide in practice, and their experience will be evaluated. Stage two of the pilot will focus on exploring the platform’s functionality; in stage three, the platform will be used ‘live’ with at least one cohort of students.

Despite increasing interest in the potential of AI, especially for providing automated feedback to students, there remain limitations. It is notable that the pilot is focused on STEM subjects, and the UK National Centre for AI says that “The most appropriate types of assignments will be those where there is a definitive correct answer and where feedback would also be expected on the working out.”