Artificial Intelligence and ethics

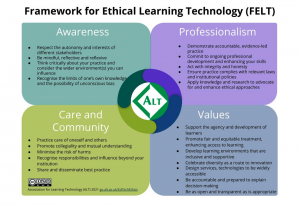

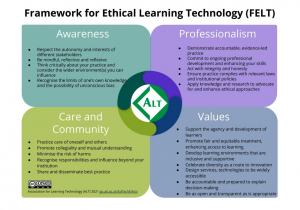

I have written before that, despite the obvious ethical issues posed by Artificial Intelligence in general, and the particular issues it raises for education, I am not convinced by the various frameworks setting down rubrics for ethics, which are often voluntary and often developed by professionals from within the AI industry. But I am encouraged by the UK Association for Learning Technology's (ALT) Framework for Ethical Learning Technology, released at its annual conference last week. Importantly, it builds on ALT's professional accreditation framework, CMALT, which has been expanded to include ethical considerations for professional practice and research.

ALT say:

> ALT’s Framework for Ethical Learning Technology (FELT) is designed to support individuals, organisations and industry in the ethical use of learning technology across sectors. It forms part of ALT’s strategic aim to strengthen recognition and representation for Learning Technology professionals from all sectors. The need for such a framework has become increasingly urgent as Learning Technology has been adopted on a larger scale than ever before and as the leading professional body for Learning Technology in the UK, representing 3,500 Members, ALT is well placed to lead this effort. We define Learning Technology as the broad range of communication, information and related technologies that are used to support learning, teaching and assessment. We recognise the wider context of Learning Technology policy, theory and history as fundamental to its ethical, equitable and fair use.

More details and resources are available on the ALT website.