AI: education and learning are not the same thing

As the debate rolls on about the use of AI in education, we seem stuck on previous paradigms about how technology can be used to support the existing education system, rather than thinking about AI and learning. Bill Gates said last week: "The dream that you could have a tutor who’s always available to you, and understands how to motivate you, what your level of knowledge is, this software should give us that. When you’re outside the classroom, that personal tutor is helping you out, encouraging you, and then your teacher, you know, talks to the personal tutor." This thinking can be seen in the release of apps designed to make the system run more efficiently, supporting teachers in producing lesson plans, reducing administration and so on. For learners, there is a swathe of tutor apps and agents that help them navigate their way through, supporting the development of skills and knowledge.

But writing about the popular educational exercise of future forecasting in the European Journal of Education in 2022, Neil Selwyn outlined five broad areas of contention that merit closer attention in future discussion and decision-making. These include, he said:

(1) "taking care to focus on issues relating to 'actually existing' AI rather than the overselling of speculative AI technologies;

(2) clearly foregrounding the limitations of AI in terms of modelling social contexts, and simulating human intelligence, reckoning, autonomy and emotions;

(3) foregrounding the social harms associated with AI use;

(4) acknowledging the value-driven nature of claims around AI; and

(5) paying closer attention to the environmental and ecological sustainability of continued AI development and implementation."

In a recent presentation, Rethinking Education, Ilkka Tuomi does not try to predict the future of technology in education. Instead, he reconsiders the purpose of AI in education. AI, he says, "changes knowledge production and use. This has implications for education, research, innovation, politics, and culture. Current educational institutions are answers to industrial-age historical needs."

EdTech, he says, has conflated education and learning, but they are not the same thing. He quotes Biesta (2015), who said: "education is not designed so that children and young people might learn – people can learn anywhere and do not really need education for it – but so that they might learn particular things, for particular reasons, supported by particular (educational) relationships."

He goes on to quote Arendt (1961), who said: "Normally the child is first introduced to the world in school. Now school is by no means the world and must not pretend to be; it is rather the institution that we interpose between the private domain of home and the world in order to make the transition from the family to the world possible at all. Attendance there is required not by the family but by the state, that is by the public world, and so, in relation to the child, school in a sense represents the world, although it is not yet actually the world."

Education 4.0, he says, is supposedly about "preparing children for the demands of the future." As a result, "education becomes a skill-production machine." Yet "skills are typically reflections of existing technology that is used in productive practice" and "skills change when technology changes." Tuomi notes that "there are now 13 393 skills listed in the European Skills, Competences, and Occupations taxonomy."

Digital skills are special, he says, "because the computer is a multi-purpose tool," and "AI skills are even more special, because they interact with human cognition."

Social and emotional "skills," he argues, rank-order people. "'21st century skills' are strongly linked to human personality, which, by definition, is stable across the life-span," and "people can be sorted based on, e.g., 'openness to experience,' 'conscientiousness,' 'agreeableness,' 'verbal ability,' 'complex problem-solving skills,' etc."

His point is that education does not change these rank orders: "Instead, training and technology potentially increase existing differences."

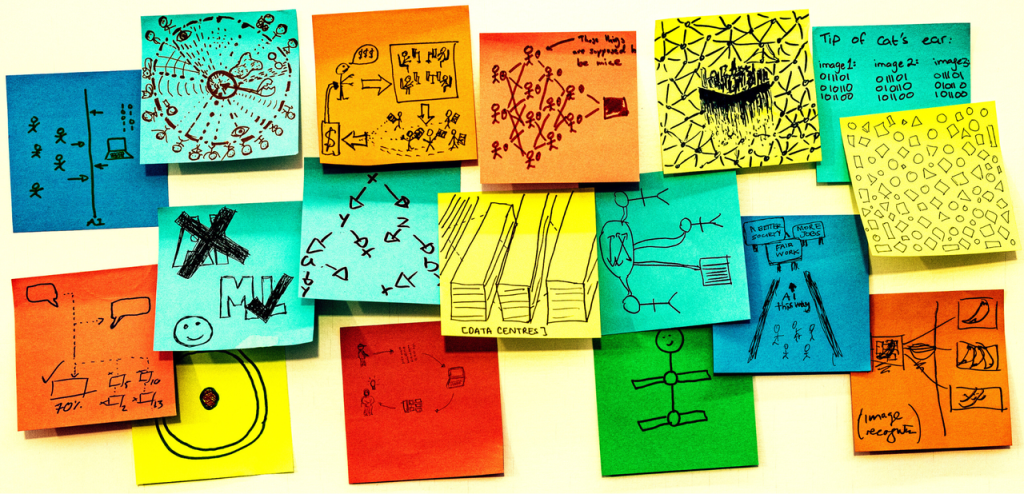

Tuomi draws attention to the three social functions of education:

- "Enculturation: Becoming a member of the adult world, community of practice, or thought community

- Development of human agency: Becoming a competent participant in social and material worlds with the capability to transform them

- Reproduction of social structures: Maintaining social continuity; social stratification through qualification and social filtering"

AI in education, he says, maps onto each of these functions:

- Enculturation: "AI for knowledge transfer and mastery"

- Development of human agency: "AI for augmentation of agency"

- Reproduction of social structures: "AI for prediction and classification (drop-out / at-risk, high-stakes assessment)"

Turning to incentives and motives in higher education (HE), Tuomi observes that "students used to be proud to be on their way into becoming respected experts and professionals in the society. For many families, this required sacrifice." Now, however, they are facing "LLMs that know everything." Why, he asks, "should you waste your time in becoming an expert in a world where the answers and explanations become near zero-cost commodities?" What happens to HE, he asks, "when AI erodes the epistemic function of education?" In his view, "the traditional focus of AI&ED in accelerating learning and achieving mastery of specific knowledge topics is not sustainable."

His proposal is that "the only sustainable advantage for primary and secondary education will be a focus on the development of human agency. Agency is augmented by technology. Agency is culturally embedded and relies on social collaboration and coordination. Affect and emotion are important and the epistemic function will be increasingly seen from the point of view of cognitive development (not knowledge acquisition). Qualification has already lost importance as the network makes history visible. It still is important for social stratification (in many countries)."

He concludes by reiterating that "Education is a social institution. It should not be conflated with 'learning'. AI vendors typically reinterpret education as learning. Education becomes 'personalized' and 'individualized,' and the objective changes to fast acquisition of economically useful skills and knowledge. The vendors are looking for education under the lamp-post, but this lamp-post is something they themselves have set up. Very little to do with education."