Data governance, management and infrastructure

Photo by Brooke Cagle on Unsplash

The big ed-tech news this week is the merger of Anthology, an educational management company, with Blackboard, which produces learning technology. But as Stephen Downes said, "It's funny, though - the more these companies grow and the wider their enterprise capabilities become, the less relevant they feel, to me at least, to educational technology and online learning."

There is also a revealing quote in an Inside Higher Ed article about the merger. It quotes Bill Ballhaus, Blackboard's chairman, CEO and president, as saying that the power of the combined company will flow from its ability to bring data from across the student life cycle to bear on student and institutional performance. "We're on the cusp of breaking down the data silos that often exist between administrative and academic departments on campuses," Ballhaus said.

So is the new company really about educational technology, or is it in reality a data company? This raises many questions about who owns student data, about data privacy, and about how institutions manage data. A new UK Open Data Institute (ODI) Fellow Report, Data governance for online learning, by Janis Wong, explores the data governance considerations of working with online learning data, looking at how educational institutions should rethink how they manage, protect and govern online learning data and personal data.

In a summary of the report, the ODI say:

The Covid-19 pandemic has increased the adoption of technology in education by higher education institutions in the UK. Although students are expected to return to in-person classes, online learning and the digitisation of the academic experience are here to stay. This includes the increased gathering, use and processing of digital data.

They go on to conclude:

Within online and hybrid learning, university management needs to consider how different forms of online learning data should be governed, from research data to teaching data to administration and the data processed by external platforms.

Online and hybrid learning needs to be inclusive and institutions have to address the benefits to, and concerns of, students and staff as the largest groups of stakeholders in delivering secure and safe academic experiences. This includes deciding what education technology platforms should be used to deliver, record and store online learning content, by comparing the merits of improving user experience against potential risks to vast data collection by third parties.

Online learning data governance needs to be considered holistically, with an understanding of how different stakeholders interact with each other’s data to create innovative, digital means of learning. When innovating for better online learning practices, institutions need to balance education innovation with the protection of student and staff personal data through data governance, management and infrastructure strategies.

The full report is available from the ODI web site.

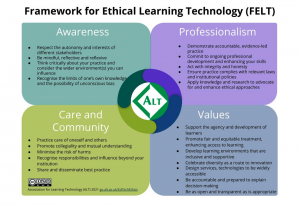

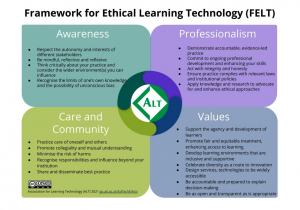

I have written before that, despite the obvious ethical issues posed by Artificial Intelligence in general, and the particular issues it raises for education, I am not convinced by the various frameworks setting down rubrics for ethics, which are often voluntary and often developed by professionals from within the AI industry. But I am encouraged by the UK Association for Learning Technology's (ALT) Framework for Ethical Learning Technology, released at its annual conference last week. Importantly, it builds on ALT's professional accreditation framework, CMALT, which has been expanded to include ethical considerations for professional practice and research.